Wednesday, April 20, 2011

Leonardo funny robot

AR Drone to fly

Movies Become Real: Laser Gun

Iron Man Laser Light Becomes Real

If you have seen the Iron Man movie, the image above is sure to be familiar to you.

Patrick from Germany shared this project with us in the Hacked Gadgets forum. We have seen other cool Iron Man Repulsor Light projects before, but as far as I know this is the first one that is truly dangerous.

So, a word of warning: do not attempt to copy this build unless you know what you are doing! Patrick already has plans for version 2 in his head, and I look forward to seeing it.

The goal was to create a hand-held laser…powerful…balloons pop across the room…cuts plastic…

I have made SOME laser guns before, and the most “useless”, space-eating part was the grip.

So I had to get rid of it. I am a HUGE fan of the new Iron Man movies, so I decided to try my own design and make a glove. It took a whole weekend to make, and another 2 days for the paint job (I made EVERYTHING myself: metalwork, wiring, paint job).

Technical info:

# made of 2 mm brass sheet

# constant-current LM317 driver

# 445 nm, 1000 mW laser diode

# 2x 3.7 V Li-ion cells (7.4 V total)
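
For readers wondering what "constant current LM317 driver" means in practice: in that configuration the LM317 holds its reference voltage (nominally 1.25 V) across a resistor between its OUT and ADJ pins, so the output current is roughly I = 1.25 V / R. The post does not say what current Patrick's driver was set to, so the currents below are hypothetical examples, not his values. Also keep in mind that the 7.4 V pack (2 × 3.7 V) has to cover the diode's forward voltage, the 1.25 V across the resistor and the regulator's own dropout.

```python
# Minimal sketch: sizing the current-set resistor for an LM317 used as a
# constant-current source. I = V_REF / R, ignoring the small ADJ-pin current.
# The target currents in the loop are HYPOTHETICAL examples; the build's
# actual drive current is not stated in the post.

V_REF = 1.25  # volts, nominal LM317 reference voltage


def set_resistor(target_current_a: float) -> float:
    """Resistance in ohms that sets the given output current in amps."""
    if target_current_a <= 0:
        raise ValueError("current must be positive")
    return V_REF / target_current_a


def resistor_power(target_current_a: float) -> float:
    """Power in watts dissipated in the set resistor: P = V_REF * I."""
    return V_REF * target_current_a


for amps in (0.5, 1.0):  # hypothetical drive currents
    print(f"{amps:.1f} A -> R = {set_resistor(amps):.2f} ohm, "
          f"P_R = {resistor_power(amps):.3f} W")
```

Note that the resistor carries the full laser current, so its power rating matters as much as its value.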

3D iPad

This 3D iPad demo is the future of portable media. Laurence Nigay, a professor from France, was inspired by the 3D work done by Johnny Lee, who now works for Google.

We track the head of the user with the front-facing camera in order to create a glasses-free monocular 3D display. Such a spatially aware mobile display expands the possibilities for interaction.

It does not use the accelerometers; it relies only on the front camera.
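
How can a flat screen look 3D to one eye? A common way to do it is head-coupled ("fish-tank") perspective: the camera estimates where your head is, and the renderer uses an off-centre view frustum whose edges line up with the physical screen as seen from that point. The sketch below shows that general technique only; it is not the code from Nigay's demo. It assumes the screen lies in the plane z = 0 centred on the origin, and that a hypothetical head tracker reports the eye position in the same units as the screen size, with z > 0 in front of the screen.

```python
# Hypothetical sketch of head-coupled perspective (off-axis projection).
# Assumptions: screen centred at the origin in the plane z = 0; eye position
# (x, y, z) comes from some camera-based head tracker, z > 0 in front.
from dataclasses import dataclass


@dataclass
class Frustum:
    left: float
    right: float
    bottom: float
    top: float
    near: float


def off_axis_frustum(eye, screen_w, screen_h, near):
    """Asymmetric frustum whose near-plane edges match the physical screen
    edges as seen from the tracked eye position."""
    ex, ey, ez = eye
    if ez <= 0:
        raise ValueError("eye must be in front of the screen (z > 0)")
    scale = near / ez  # similar triangles: screen edges projected onto near plane
    return Frustum(
        left=(-screen_w / 2 - ex) * scale,
        right=(screen_w / 2 - ex) * scale,
        bottom=(-screen_h / 2 - ey) * scale,
        top=(screen_h / 2 - ey) * scale,
        near=near,
    )


# Head slightly right of and below centre, 40 units from a 20 x 15 screen.
print(off_axis_frustum((3.0, -2.0, 40.0), 20.0, 15.0, 0.1))
```

The four edge values are what a glFrustum-style projection expects; the virtual camera is also moved to the eye position, so the scene appears to sit behind the glass as your head moves.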

Ant navigation Robot

Tuesday, April 19, 2011

SONY DOG

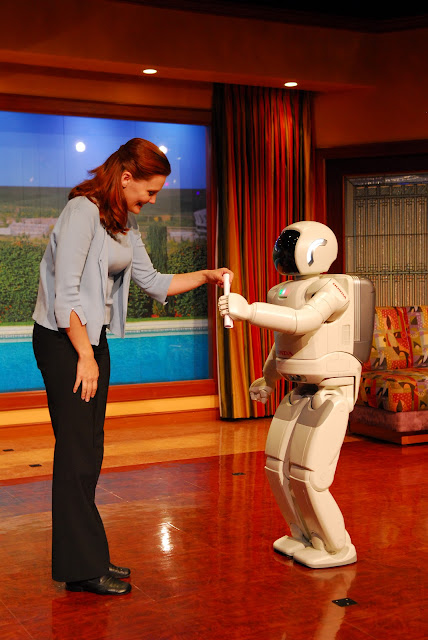

ASIMO Robot

Monday, April 18, 2011

Robots Can Be Full of Love

Whether they are assisting the elderly or simply popping human skulls like ripe fruit, robots aren't usually known for their light touch. And while this may be fine as long as they stay relegated to cleaning floors and assembling cars, as robots perform more tasks that put them in contact with human flesh, be it surgery or helping the blind, their touch sensitivity becomes increasingly important.

Thankfully, researchers at the University of Ghent, Belgium, have solved the problem of delicate robot touch.

Unlike the mechanical sensors currently used to regulate robotic touching, the Belgian researchers used optical sensors to measure the feedback. Under the robot skin, they created a web of optical beams. Even the faintest break in those beams registers in the robot's computer brain, making the skin far more sensitive than mechanical sensors, which are prone to interfering with each other.
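
To picture how a "web of optical beams" can sense a touch, here is a hypothetical sketch of beam-break detection. The post does not describe the Ghent sensor's layout, so the crossed grid of row and column beams, the baseline readings and the 5% threshold are all assumptions chosen for illustration: a beam counts as broken when its light level drops noticeably below its untouched baseline, and a touch is reported where a broken row crosses a broken column.

```python
# Hypothetical sketch of beam-break touch detection. The crossed-grid layout,
# baselines and 5 % drop threshold are ASSUMPTIONS, not details of the
# University of Ghent sensor.


def broken_beams(readings, baseline, drop_fraction=0.05):
    """Indices of beams whose light level fell by more than drop_fraction
    relative to their untouched baseline."""
    return [
        i for i, (now, ref) in enumerate(zip(readings, baseline))
        if ref > 0 and (ref - now) / ref > drop_fraction
    ]


def touch_points(row_now, row_ref, col_now, col_ref, drop_fraction=0.05):
    """Candidate contact cells: each crossing of a broken row beam with a
    broken column beam."""
    rows = broken_beams(row_now, row_ref, drop_fraction)
    cols = broken_beams(col_now, col_ref, drop_fraction)
    return [(r, c) for r in rows for c in cols]


# A light press dims row beam 2 and column beam 1 by about 10 %.
row_ref = [1.0, 1.0, 1.0, 1.0]
col_ref = [1.0, 1.0, 1.0]
print(touch_points([1.0, 1.0, 0.9, 1.0], row_ref, [1.0, 0.9, 1.0], col_ref))
# prints [(2, 1)]
```

A plain crossed grid like this reports "ghost" crossings when two spots are pressed at once, which is one reason a real skin would use a denser or differently arranged web of beams.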

Robots like the da Vinci surgery station already register feedback from touch, but a coating of this optical sensor-laden skin could vastly enhance the sensitivity of the machine. Additionally, a range of Japanese robots designed to help the elderly could gain a lighter touch with their sensitive charges if equipped with the skin.

Really, any interaction between human flesh and robot surfaces could benefit from the more lifelike touch provided by this sensor array. And to answer the question you're all thinking but won't say: yes. But please, get your mind out of the gutter. This is a family site.

Via New Scientist

Honda U3-X no more traffic

It's a nifty little device: essentially a sit-down Segway unicycle that looks like a figure-8-shaped boombox, with a pop-out seat and footrests.

The machine balances itself, with or without a rider.

You move and steer by leaning where you want to go: forward, backward and, in a unique twist, side to side. That's thanks to an impressive new wheel Honda has developed, which is actually constructed from a bunch of much smaller wheels that can rotate perpendicular to the main wheel. The balance is very easy and intuitive, possibly too much so, as overconfidence can lead to a sideways pratfall.
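
Why does a wheel made of smaller wheels let you go sideways? In an idealised model, turning the big wheel moves you forward or backward, while spinning the small roller touching the ground moves you sideways, and combining the two gives any diagonal. The sketch below works through that idealised model only. The radii are hypothetical (Honda's figures aren't given here), it ignores slip, and it says nothing about how Honda actually drives the rollers.

```python
# Idealised kinematics of a wheel whose rim is a ring of small perpendicular
# rollers. ASSUMPTIONS: no slip; main wheel radius R and roller radius r are
# made-up values; rollers are treated as independently controllable.
import math


def wheel_speeds(vx, vy, big_radius, roller_radius):
    """Angular speeds (rad/s) for a ground velocity of vx forward and vy
    sideways (m/s): main wheel handles vx, ground roller handles vy."""
    return vx / big_radius, vy / roller_radius


def ground_velocity(omega_main, omega_roller, big_radius, roller_radius):
    """Inverse: ground speed (m/s) and heading (degrees, 0 = straight ahead)."""
    vx = omega_main * big_radius
    vy = omega_roller * roller_radius
    return math.hypot(vx, vy), math.degrees(math.atan2(vy, vx))


# Hypothetical radii: 0.15 m main wheel, 0.02 m rollers.
# 1.8 m/s is roughly the 4 mph top speed quoted for the U3-X.
w_main, w_roll = wheel_speeds(1.8, 0.0, 0.15, 0.02)
speed, heading = ground_velocity(w_main, w_roll, 0.15, 0.02)
print(f"main wheel {w_main:.1f} rad/s, rollers {w_roll:.1f} rad/s, "
      f"{speed:.2f} m/s at {heading:.1f} deg")
```

Asking for equal forward and sideways speeds in this model gives a 45 degree heading, which is the "lean diagonally, roll diagonally" behaviour described above.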

It's pretty compact and weighs in at roughly 22 pounds, which makes it easy to pick up by the handle and lug up a flight of stairs. But the fastest it'll go is about 4 miles an hour, just a brisk walking pace, and the lithium-ion battery runs for just about an hour, so it's hard to imagine what the potential market for this thing would be.

A Honda spokesman suggested it could be used by security guards who need to patrol around a site, or rented out to museumgoers so they can browse from painting to painting for an hour or so without tiring their tootsies, although the high-pitched, vacuum-cleaner-like whine from the motor might be a bit distracting to other art lovers.

Probably the most likely nearish-term use of the technology on display here would be to re-purpose the innovative wheels onto conventional wheelchairs, allowing for far greater lateral mobility. For now, Honda's got no plans to bring this to market, and no guess at what the price would be if and when it did.

Honda's Omni Traction Drive System: the wheel of the U3-X is made up of a series of smaller independent wheels that rotate perpendicular to the main one. (Image: Honda)

via popsci

Sunday, April 17, 2011

Swarm Robot

Use Microsoft Surface to Control Robots With Your Fingertips

A sharp-looking tabletop touchscreen can be used to command robots and combine data from various sources, potentially improving military planning, disaster response and search-and-rescue operations.

Mark Micire, a graduate student at the University of Massachusetts-Lowell, proposes using Surface, Microsoft's interactive tabletop, to unite various types of data, robots and other smart technologies around a common goal. It seems so obvious and so simple, you have to wonder why this type of technology is not already widespread.

In defending his graduate thesis earlier this week, Micire showed off a demo of his swarm-control interface, which you can watch below.

You can tap, touch and drag little icons to command individual robots or robot swarms. You can leave a trail of crumbs for them to follow, and you can draw paths for them in a way that looks quite like Flight Control, one of our favorite iPod/iPad games. To test his system, Micire steered a four-wheeled vehicle through a plywood maze.
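
The "trail of crumbs" idea can be sketched as simple waypoint following: the drawn path becomes a list of points, and each robot heads for the next point at a capped speed, dropping it once close enough. This is an illustration of the general idea, not code from Micire's interface; the speed, tolerance and time step are made-up parameters, and a swarm would just run the same loop per robot.

```python
# Hypothetical sketch of breadcrumb (waypoint) following for one robot.
# max_speed, dt and tolerance are MADE-UP parameters, not values from
# the UMass Lowell system.
import math


def follow_crumbs(start, crumbs, max_speed=0.5, dt=0.1, tolerance=0.01,
                  max_steps=10_000):
    """Simulate a robot following waypoints; returns visited positions."""
    x, y = start
    path = [(x, y)]
    queue = list(crumbs)
    steps = 0
    while queue and steps < max_steps:
        tx, ty = queue[0]
        dx, dy = tx - x, ty - y
        dist = math.hypot(dx, dy)
        if dist <= tolerance:
            queue.pop(0)  # crumb reached, head for the next one
            continue
        step = min(max_speed * dt, dist)  # never overshoot the crumb
        x += dx / dist * step
        y += dy / dist * step
        path.append((x, y))
        steps += 1
    return path


# Drive along the bottom edge of a square, then up the right-hand side.
trail = follow_crumbs((0.0, 0.0), [(1.0, 0.0), (1.0, 1.0)])
print(len(trail), trail[-1])
```

Capping each step at the remaining distance keeps the robot from oscillating around a crumb, and `max_steps` stops the simulation if a crumb is somehow unreachable.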

Control This Robot With a Touchscreen: Mark Micire/UMass Lowell Robotics Lab

The system can integrate a variety of data sets, like city maps, building blueprints and more. You can pan and zoom in on any map point, and you can even integrate video feeds from individual robots so you can see things from their perspective.

As Micire describes it, current disaster-response methods can’t automatically compile and combine information to search for patterns. A smart system would integrate data from all kinds of sources, including commanders, individuals and robots in the field, computer generated risk models and more.

Emergency responders might not have the time or opportunity to get in-depth training on new technologies, so a simple touchscreen control system like this would be more useful. At the very least, it seems like a much more intuitive way to control future robot armies.