Wednesday, April 20, 2011

Ant navigation Robot

Tuesday, April 19, 2011

SONY DOG

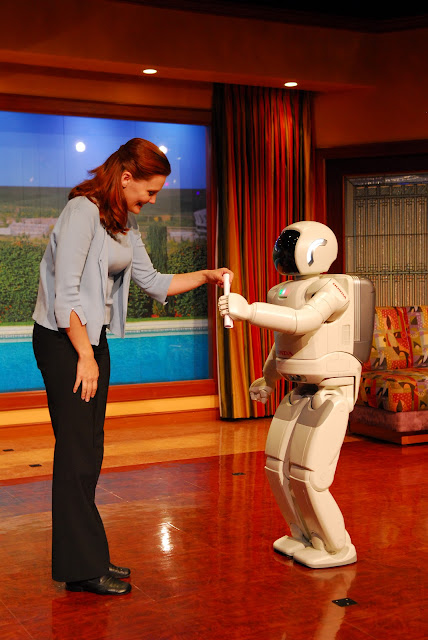

ASIMO Robot

Monday, April 18, 2011

Robots Can Be Full of Love

Whether they are assisting the elderly or simply popping human skulls like ripe fruit, robots aren't usually known for their light touch. And while this may be fine as long as they stay relegated to cleaning floors and assembling cars, as robots perform more tasks that put them in contact with human flesh, be it surgery or helping the blind, their touch sensitivity becomes increasingly important.

Thankfully, researchers at the University of Ghent, Belgium, have solved the problem of delicate robot touch.

Unlike the mechanical sensors currently used to regulate robotic touching, the Belgian researchers used optical sensors to measure the feedback. Under the robot skin, they created a web of optical beams. Even the faintest break in those beams registers in the robot's computer brain, making the skin far more sensitive than mechanical sensors, which are prone to interfering with each other.
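As a rough illustration of how a break in a web of beams could be turned into a touch location, here is a minimal sketch. It assumes a hypothetical grid of row and column beams whose intensities are normalized to 1.0 when unobstructed; the function name, the threshold and the grid layout are illustrative assumptions, not details of the Ghent design.

```python
from typing import List, Tuple


def locate_touches(row_beams: List[float],
                   col_beams: List[float],
                   baseline: float = 1.0,
                   threshold: float = 0.05) -> List[Tuple[int, int]]:
    """Return (row, col) cells where a row beam and a column beam both dimmed.

    Assumption: each reading is a normalized light intensity, and a touch
    shows up as a drop of more than `threshold` below `baseline`.
    """
    dimmed_rows = [i for i, v in enumerate(row_beams) if baseline - v > threshold]
    dimmed_cols = [j for j, v in enumerate(col_beams) if baseline - v > threshold]
    # A touch is reported wherever a dimmed row crosses a dimmed column.
    return [(i, j) for i in dimmed_rows for j in dimmed_cols]


if __name__ == "__main__":
    # Row 1 and column 2 are slightly dimmed, so one cell is reported.
    print(locate_touches([1.0, 0.9, 1.0], [1.0, 1.0, 0.8]))
```

With several simultaneous touches, a simple row-and-column scheme like this one also reports "ghost" crossings, so a real skin would need extra information to tell them apart.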

Robots like the da Vinci surgery station already register feedback from touch, but a coating of this optical sensor-laden skin could vastly enhance the sensitivity of the machine. Additionally, a range of Japanese robots designed to help the elderly could gain a lighter touch with their sensitive charges if equipped with the skin.

Really, any interaction between human flesh and robot surfaces could benefit from the more lifelike touch provided by this sensor array. And to answer the question you're all thinking but won't say: yes. But please, get your mind out of the gutter. This is a family site.

Via New Scientist

Sunday, April 17, 2011

Swarm Robot

Use Microsoft Surface to Control Robot Swarms With Your Fingertips

A sharp-looking tabletop touchscreen can be used to command robots and combine data from various sources, potentially improving military planning, disaster response and search-and-rescue operations.

Mark Micire, a graduate student at the University of Massachusetts-Lowell, proposes using Surface, Microsoft's interactive tabletop, to unite various types of data, robots and other smart technologies around a common goal. It seems so obvious and so simple, you have to wonder why this type of technology is not already widespread.

In defending his graduate thesis earlier this week, Micire showed off a demo of his swarm-control interface, which you can watch below.

You can tap, touch and drag little icons to command individual robots or robot swarms. You can leave a trail of crumbs for them to follow, and you can draw paths for them in a way that looks quite like Flight Control, one of our favorite iPod/iPad games. To test his system, Micire steered a four-wheeled vehicle through a plywood maze.
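The "trail of crumbs" idea can be sketched as simple waypoint following. This is only an illustration under our own assumptions (a robot that moves in fixed-size steps on a flat plane, and a hypothetical `follow_trail` function); it is not taken from Micire's interface.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def follow_trail(start: Point, crumbs: List[Point], step: float = 1.0) -> List[Point]:
    """Return every position visited while heading to each crumb in order."""
    x, y = start
    path = [start]
    for cx, cy in crumbs:
        while True:
            dist = math.hypot(cx - x, cy - y)
            if dist <= step:
                # Close enough: snap onto the crumb and move to the next one.
                x, y = cx, cy
                path.append((x, y))
                break
            # Otherwise take one fixed-length step straight toward the crumb.
            x += step * (cx - x) / dist
            y += step * (cy - y) / dist
            path.append((x, y))
    return path


if __name__ == "__main__":
    for position in follow_trail((0.0, 0.0), [(3.0, 0.0), (3.0, 2.0)]):
        print(position)
```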

Control This Robot With a Touchscreen: Mark Micire/UMass Lowell Robotics Lab

The system can integrate a variety of data sets, like city maps, building blueprints and more. You can pan and zoom in on any map point, and you can even integrate video feeds from individual robots so you can see things from their perspective.

As Micire describes it, current disaster-response methods can’t automatically compile and combine information to search for patterns. A smart system would integrate data from all kinds of sources, including commanders, individuals and robots in the field, computer-generated risk models and more.

Emergency responders might not have the time or opportunity to get in-depth training on new technologies, so a simple touchscreen control system like this would be more useful. At the very least, it seems like a much more intuitive way to control future robot armies.

Underwater Robot

Robots Controlled by Underwater Tablets Show Off Their Swimming Skills

The New Scientist has some great new video of our flippered friends.

The Aqua robots can be used in hard-to-reach spots like coral reefs, shipwrecks or caves.

Though the diver remains at a safe distance, he can see everything the robot sees. Check out this robot’s-eye-view of a swimming pool.

Aqua robots are controlled by tablet computers encased in a waterproof shell. Motion sensors can tell how the waterproofed computer is tilted, and the robot moves in the same direction, New Scientist reports.
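To make the tilt-to-motion idea concrete, here is a minimal sketch of how tilt angles might be mapped to motion commands. The function name, the dead zone and the 45-degree limit are assumptions chosen for illustration; the actual Aqua control software is not described in the post.

```python
def tilt_to_command(pitch_deg: float, roll_deg: float,
                    dead_zone_deg: float = 5.0,
                    max_tilt_deg: float = 45.0) -> dict:
    """Map tablet tilt angles to normalized commands in the range [-1, 1]."""

    def scale(angle: float) -> float:
        # Ignore small tilts so a slightly unsteady hand does not move the robot.
        if abs(angle) < dead_zone_deg:
            return 0.0
        # Clamp extreme tilts, then normalize to [-1, 1].
        clamped = max(-max_tilt_deg, min(max_tilt_deg, angle))
        return clamped / max_tilt_deg

    return {"forward": scale(pitch_deg), "turn": scale(roll_deg)}


if __name__ == "__main__":
    print(tilt_to_command(2.0, 0.0))     # inside the dead zone: no motion
    print(tilt_to_command(90.0, -22.5))  # full speed ahead, half-rate turn
```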

As we wrote earlier this summer, tablet-controlled robots working in concert with human divers would be much easier to command than undersea robots controlled from a ship. Plus, they just look so cute.

Willow Garage Robot

Willow Garage's PR2 Plays Billiards

Proving that robots really do have a place at the pub (time to change your archaic anti-droid policies, Mos Eisley Cantina), the team over at Willow Garage has programmed one of its PR2 robots to play a pretty impressive game of pool.

More impressively, they did it in just under one week.

To get the PR2 to make pool-shark-worthy shots, the team had to figure out how to make it recognize both the table and the balls, things that come easily to all but the thirstiest pool hall patrons.

PR2 used its high-res camera to locate and track balls and to orient itself to the table via the diamond markers on the rails.

It further oriented itself by identifying the table legs with its lower laser sensor.

Once the bot learned how to spatially identify the balls and the table, the team simply employed an open-source pool physics program to let the PR2 plan and execute its shots.
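Once ball and pocket positions are known, one common way to pick an aim point is the "ghost ball" rule: the cue ball's center should arrive two ball radii behind the object ball, on the line from the pocket through the object ball. The sketch below illustrates that rule only; it is not the open-source physics program the team used, and the function name and coordinates are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float]


def ghost_ball(object_ball: Point, pocket: Point, ball_radius: float) -> Point:
    """Return where the cue ball's center should be at the moment of impact."""
    ox, oy = object_ball
    px, py = pocket
    dx, dy = px - ox, py - oy
    dist = math.hypot(dx, dy)
    if dist == 0:
        raise ValueError("object ball is already at the pocket")
    # Step back from the object ball, away from the pocket, by two radii.
    return (ox - 2 * ball_radius * dx / dist,
            oy - 2 * ball_radius * dy / dist)


if __name__ == "__main__":
    # Object ball at (1, 1), pocket straight to its right, 3 cm ball radius.
    print(ghost_ball((1.0, 1.0), (2.0, 1.0), 0.03))
```

A full shot planner would also have to check that the path to the ghost-ball position is clear and choose a shot speed, which this sketch leaves out.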

Murata Girl Robot

Murata Girl And Her Beloved Unicycle Murata

Following in the footsteps of many robots we’ve seen who perform awesome but random feats, Japanese electronics company Murata has revealed an update of their Little Seiko humanoid robot for 2010.

Murata Girl, as she is known, is 50 centimeters tall, weighs six kilograms, and can unicycle backwards and forwards. Whereas in her previous iteration she could only ride across a straight balance beam, she is now capable of navigating an S-curve as thin as 2.5 centimeters, only one centimeter wider than the tire of her unicycle.

The secret is a balancing mechanism that calculates the angle she needs to turn to safely maneuver around the curves. She also makes use of a perhaps more rudimentary, but nonetheless effective, balancing mechanism and holds her arms stretched out to her sides, Nastia Liukin-style. Murata Girl is battery-powered, outfitted with a camera, and controllable via Bluetooth or Wi-Fi.

Also, because we know you were wondering, she’s a Virgo and her favorite pastime is (naturally) practicing riding her unicycle at the park.

Archer Robot Learns How To Aim and Shoot A Bow and Arrow

By using a learning algorithm, Italian researchers taught a child-like humanoid robot archery, even outfitting it with a spectacular headdress to celebrate its new skill.

Petar Kormushev, Sylvain Calinon and Ryo Saegusa of the Italian Institute of Technology developed an algorithm called “Archer,” for Augmented Reward Chained Regression.

The iCub robot is taught how to hold the bow and arrow, but then learns by itself how to aim and shoot the arrow so it hits the center of a target. Watch it learn below.

The researchers say this type of learning algorithm would be preferable to even their own reinforcement learning techniques, which require more input from humans.

The team used an iCub, a small humanoid robot designed to look like a 3-year-old child. It was developed by a consortium of European universities with the goal of mimicking and understanding cognition, according to Technology Review.

It has several physical and visual sensors, and “Archer” takes advantage of them to provide more feedback than other learning algorithms, the researchers say.

The team will present their findings with the archery learning algorithm at the Humanoids 2010 conference in December.

NASA Robotic Plane

NASA's ARES Mars Plane Langley Research Center

Made to take Mars Exploration to the Skies

As a general rule, when NASA flies a scientific mission all the way to Mars, we expect that mission to last for a while.

For instance, the Spirit and Opportunity rovers were slated to run for three months and are still operating six years later. But one NASA engineer wants to send a mission all the way to the Red Planet that would last just two hours once deployed: a rocket-powered, robotic airplane that screams over the Martian landscape at more than 450 miles per hour.

ARES (Aerial Regional-Scale Environmental Surveyor) has been on the back burner for a while now, and while it's not the first Mars plane dreamed up by NASA, it is the first one that very well might see some flight time over the Martian frontier.

Flying at about a mile above the surface, it would sample the environment over a large swath of area and collect measurements over rough, mountainous parts of the Martian landscape that are inaccessible by ground-based rovers and also hard to observe from orbiters.

The Mars plane would most likely make its flight over the southern hemisphere, where regions of high magnetism in the crust and mountainous terrain have presented scientists with a lot of mystery and not much data.

Enveloped in an aeroshell similar to the ones that deployed the rovers, ARES would detach from a carrier craft about 12 hours from the Martian surface. At about 20 miles up, the aeroshell would open, ARES would extend its folded wings and tail, and the rockets would fire. It sounds somewhat complicated, but compared with actually landing a package full of sensitive scientific instruments on the surface, deploying ARES is relatively simple.

The flight would only last for two hours, but during that short time ARES would cover more than 932 miles of previously unexplored territory, taking atmospheric measurements, looking for signs of water, collecting chemical sensing data, and studying crustal magnetism. Understanding the magnetic field in this region will tell researchers whether the magnetic fields there might shield the region from high-energy solar winds, which in turn has huge implications for future manned missions there.
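As a quick sanity check on those figures, the short calculation below works out the average speed implied by 932 miles in two hours and converts the distance to kilometers. The function names are ours, and the observation that 932 miles is almost exactly 1,500 km is our own inference rather than something stated in the post.

```python
MILES_TO_KM = 1.609344  # exact definition of the international mile


def average_speed_mph(distance_miles: float, duration_hours: float) -> float:
    """Average speed needed to cover a distance in a given time."""
    return distance_miles / duration_hours


def miles_to_km(miles: float) -> float:
    """Convert miles to kilometers."""
    return miles * MILES_TO_KM


if __name__ == "__main__":
    print(average_speed_mph(932, 2))  # 466.0
    print(round(miles_to_km(932)))    # 1500
```

An average of 466 miles per hour is consistent with the "more than 450 miles per hour" quoted for the plane.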

The NASA team already has a half-scale prototype of ARES that has successfully performed deployment drills and wind tunnel tests that prove it will fly through the Martian atmosphere.

The team is now preparing the tech for the next NASA Mars mission solicitation and expects to see it tearing through Martian skies by the end of the decade.

(SPACE)

(PACO-PLUS) Robot Can Learn

Humanoids and Intelligence Systems Lab-Karlsruhe Institute for Technology

15 November 2010. Early risers may think it’s tough to fix breakfast first thing in the morning, but robots have it even harder.

Even getting a cereal box is a challenge for your run-of-the-mill artificial intelligence (AI).

Frosted Flakes come in a rectangular prism with colorful decorations, but so does your childhood copy of Chicken Little.

Do you need to teach the AI to read before it can give you breakfast?

Maybe not! A team of European researchers has built a robot called ARMAR-III, which tries to learn not just from previously stored instructions or massive processing power but also from reaching out and touching things. Consider the cereal box. By picking it up, the robot could learn that the cereal box weighs less than a similarly sized book, and if it flips the box over, cereal comes out.

Together with guidance and maybe a little scolding from a human coach, the robot, the result of the PACO-PLUS research project, can build general representations of objects and the actions that can be applied to them.

"[The robot] builds object representations through manipulation," explains Tamim Asfour of the Karlsruhe Institute of Technology, in Germany, who worked on the hardware side of the system.
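One way to picture learning by manipulation is a record of what happened each time the robot tried an action on an object. The sketch below is purely illustrative: the `ObjectModel` class and the action and outcome labels are invented for this example and do not describe ARMAR-III's actual software.

```python
from collections import Counter, defaultdict
from typing import Optional


class ObjectModel:
    """Toy object representation built from the outcomes of actions."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.outcomes = defaultdict(Counter)  # action -> counts of outcomes

    def record(self, action: str, outcome: str) -> None:
        """Store one observed result of applying an action to the object."""
        self.outcomes[action][outcome] += 1

    def expected(self, action: str) -> Optional[str]:
        """Return the most frequently observed outcome, or None if untried."""
        if action not in self.outcomes:
            return None
        return self.outcomes[action].most_common(1)[0][0]


if __name__ == "__main__":
    box = ObjectModel("cereal box")
    box.record("lift", "light")
    box.record("flip", "contents spill")
    box.record("flip", "contents spill")
    box.record("flip", "nothing happens")  # e.g. the box was empty once
    print(box.expected("flip"))   # contents spill
    print(box.expected("shake"))  # None
```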

The robot’s thinking is not separated from its body, because it must use its body to learn how to think. The idea, sometimes called embodied cognition, has a long history in the cognitive sciences, says psychologist Art Glenberg at Arizona State University in Tempe, who is not involved in the project.

"All sorts of our thinking are in terms of how we can act in the world, which is determined jointly by characteristics of the world and our bodies."

Embodied cognition also requires sophisticated two-way communication between a robot’s lower-level sensors such as its hands and camera eyes and its higher-level planning processor.

The hope is that an embodied-cognition robot would be able to solve other sorts of problems unanticipated by the programmer, Glenberg says.

If ARMAR-III doesn’t know how to do something, it will build up a library of new ways to look at things or move things until its higher-level processor can connect the new dots.

The PACO-PLUS system’s masters tested it in a laboratory kitchen. After some rudimentary fumbling, the robot learned to complete tasks such as searching for, identifying, and retrieving a stray box of cereal in an unexpected location in the kitchen or ordering cups by color on a table.

"It sounds trivial, but it isn’t," says team member Bernhard Hommel, a psychologist at Leiden University in the Netherlands. "He has to know what you’re talking about, where to look for it in the kitchen, even if it’s been moved, and then grasp it and return to you."

When trainers placed a cup in the robot’s way after asking it to set the table, it worked out how to move the cup out of the way before continuing the task. The robot wouldn’t have known to do that if it hadn’t already figured out what a cup is and that it’s movable and would get knocked down if left in place. These are all things the robot learned by moving its body around and exploring the scenery with its eyes.

ARMAR-III’s capabilities can be broken down into three categories: creating representations of objects and actions, understanding verbal instructions, and figuring out how to execute those instructions.

However, having the robot go through trial-and-error ways to figure out all three would just take too long. "We weren’t successful in showing the complete cycle," Asfour says of the four-year-long project, which ended last summer.

Instead, they’d provide one of the three components and let the robot figure out the rest. The team ran experiments in which they gave the robot hints, sometimes by programming and sometimes by having human trainers demonstrate something.

For example, they would tell it, "This is a green cup," instead of expecting the robot to take the time to learn the millions of shades of green.

Once it had the perception given to it, the robot could then proceed with figuring out what the user wanted it to do with the cup and planning the actions involved.

The key was the system’s ability to form representations of objects that worked at the sensory level and combine that with planning and verbal communications.

"That’s our major achievement from a scientific point of view," Asfour says.

ARMAR-III may represent a new breed of robots that don’t try to anticipate every possible environmental input, instead seeking out stimuli using their bodies to create a joint mental and physical map of the possibilities.

Rolf Pfeifer of the University of Zurich, who was not involved in the project, says, "One of the basic insights of embodiment is that we generate environmental stimulation. We don’t just sit there like a computer waiting for stimulation."

This type of thinking mimics other insights from psychology, such as the idea that humans perceive their environment in terms that depend on their physical ability to interact with it. In short, a hill looks steeper when you’re tired. One study in 2008 found that when alone, people perceive hills as being steeper than they do when accompanied by a friend.

ARMAR-III’s progeny may even offer insights into how embodied cognition works in humans, Glenberg adds. "Robots offer a marvelous way of trying to test this sort of thinking."